How Bazaarvoice moved 300 million shoppers to a new generation platform Part 2

{This is the second in a three-part series of posts intended to tell an impressive story of how we have been able to achieve an epic rearchitecture of our core platform. Special thanks to all of those who helped review and edit the original white paper.}

The Journey Begins

One of the first things we decided to tackle was moving analytics and reporting off the existing platform so that we could deliver new insights to our clients showing how shoppers use reviews in their purchase decisions. This choice also enabled us to decouple the architecture and spin up parallel teams to speed delivery. To deliver these capabilities, we adopted big data architectures based on Hadoop and HBase to assimilate hundreds of millions of web visits into analytics that would paint the full shopper journey picture for our clients. By running MapReduce over the large set of review traffic and purchase data, we are able to give our clients insight into these shopper behaviors and help them better understand the return on investment they receive from consumer generated content. As we built out this big data architecture, we also saw the opportunity to offload reporting from the review display engine. Now, all our new reporting and insight efforts are built off this data and we are actively working to move existing reporting functionality to this big data architecture.

On the front end, flexibility and mobile were huge drivers in our rearchitecture. Our original template-driven, server-side rendering can provide flexibility, but that ultimate flexibility is only required in a small number of use cases. For the vast majority, client-side rendering via JavaScript with behavior that can be configured through a simple UI would yield a better mobile-enabled shopping experience that’s easier for clients to control. We made the call early on not to try to force migration of clients from one front end technology to another. For one thing, it’s not practical for a first version of a product to match 100% of its predecessor’s features. For another, there was simply no reason to make clients choose. Instead, as clients redesigned their sites and as new clients were onboarded, they opted in to the new front end technology.

We attracted some of the top JavaScript talent in the country to this ambitious undertaking. There are some very interesting details of the architecture we built that have been described on our developer blog and that are available as open source projects in our bazaarvoice GitHub organization. Look for the post describing our Scoutfile architecture in March of 2015. The BV team is committed to giving back to the Open Source community and we hope this innovation helps you in your rearchitecture journey.

On the backend, we took inspiration from both Google and Netflix. It was clear that we needed to build an elastic, scalable, reliable, cloud-based data store and query layer. We needed to reorganize our engineering team into autonomous service oriented teams that could move faster. We needed to hire and build new skills in new technologies. We needed to be able to roll this out as transparently as possible to our clients while serving live shopping traffic, so that no one would know it was happening at all. Needless to say, we had our work cut out for us.

For the foundation of our new architecture, we chose Cassandra, an Open Source NoSQL data solution influenced by ideas from Google and their BigTable architecture. Cassandra had been battle hardened at Netflix and was a great solution for a cloud resilient, reliable storage engine. On this foundation we built a service we call Emo, originally intended for sentiment analysis. As we made progress towards delivery, we began to understand the full potential of Cassandra and its NoSQL based architecture as our primary display storage.

With Emo, we have solved the potential data consistency issues of Cassandra and guarantee ACID database operations. We can also seamlessly replicate and coordinate a consistent view of all the rating and review data across AWS availability zones worldwide, providing a scalable and resilient way to serve billions of shoppers. We can also be selective about which data replicates, for example from the European Union (EU), so that we can provide assurances of privacy for EU based clients. In addition to this consistency capability, Emo provides a databus that allows any Bazaarvoice service to listen for the kinds of changes that service particularly needs, which is perfect for a new service oriented architecture. For example, a service can listen for the event of a review passing moderation, which means the review should now be visible to shoppers.
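To make the databus idea concrete, here is a minimal in-memory sketch of a service subscribing only to the changes it cares about. It is not Emo's actual API: the `Databus` class, the `ChangeEvent` shape, and the `"APPROVED"` status value are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class ChangeEvent:
    """Hypothetical change record; the real databus event format is not described here."""
    table: str                    # e.g. "review" or "product"
    key: str                      # identifier of the changed document
    changes: Dict[str, object]    # fields that changed, with their new values


Predicate = Callable[[ChangeEvent], bool]
Handler = Callable[[ChangeEvent], None]


class Databus:
    """Toy bus: each subscriber registers a filter plus a handler."""

    def __init__(self) -> None:
        self._subscriptions: List[Tuple[Predicate, Handler]] = []

    def subscribe(self, predicate: Predicate, handler: Handler) -> None:
        self._subscriptions.append((predicate, handler))

    def publish(self, event: ChangeEvent) -> int:
        """Deliver the event to every matching subscriber and return the delivery count."""
        delivered = 0
        for predicate, handler in self._subscriptions:
            if predicate(event):
                handler(event)
                delivered += 1
        return delivered


def review_passed_moderation(event: ChangeEvent) -> bool:
    # Assumed convention: moderation sets a "status" field to "APPROVED".
    return event.table == "review" and event.changes.get("status") == "APPROVED"


bus = Databus()
visible_reviews: List[str] = []
bus.subscribe(review_passed_moderation, lambda e: visible_reviews.append(e.key))

bus.publish(ChangeEvent("review", "r1", {"status": "SUBMITTED"}))  # ignored by the filter
bus.publish(ChangeEvent("review", "r1", {"status": "APPROVED"}))   # delivered
bus.publish(ChangeEvent("product", "p9", {"name": "Widget"}))      # ignored by the filter
```

The point of the design is that the display service never polls the datastore; it only reacts to the subset of changes its filter selects.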

While Emo/Cassandra gave us many advantages, its NoSQL query capability is limited to what Cassandra’s key-value paradigm supports. We learned from our experience with Solr that having a flexible, scalable query layer on top of the master datastore resulted in significant performance advantages for calculating on-demand results of what to display during a shopper visit. This query layer naturally had to provide distributed advantages to match Emo/Cassandra. We chose ElasticSearch for our architecture and implemented a flexible rules engine we call Polloi to abstract the indexing and aggregation complexities away from engineers on teams that would use this service. Polloi hooks up to the Emo databus and provides near real time visibility to changes flowing into Cassandra.

The rest of the monolithic code base was reimplemented into services as part of our service oriented architecture. Since your code is a direct reflection of the team, as we took on this challenge we formed autonomous teams that owned everything full cycle from initial conception to operation in production. We built the teams with all the skills needed for success: product owners, developers, QA engineers, UX designers (for front end), DevOps engineers, and tech writers. We built services that managed the product catalog, UI configuration, syndication edges, content moderation, review feeds, and many more. We have many of these rearchitected services now in production and serving live traffic. Some examples include services that perform the real time calculation of which Brands are syndicating consumer generated content to which Retailers, services that process client product catalog feeds for 100s of millions of products, new API services, and much more.

To make all of the above more interesting, we also created this service-oriented architecture to leverage the full power of Amazon’s AWS cloud. It was clear we had the uncommon opportunity to build the platform from the ground up to run in the cloud with monitoring, elastic resiliency, and security capabilities that were unavailable in previous data center environments. With AWS, we can take advantage of new hardware platforms with the push of a button, create multi datacenter failover capabilities, and use new capabilities like Elastic MapReduce to deliver big data analytics to our clients. We build auto-scaling groups that allow our services to automatically add compute capacity as client traffic demands grow. We can do all of this with a highly skilled team that focuses on delivering customer value instead of hardware procurement, configuration, deployment, and maintenance.

So now, after two plus years of hard work, we have a modern, scalable service-oriented solution that can mirror exactly the original monolithic service. But more importantly, we have a production hardened new platform that we will scale horizontally for the next 10 years of growth. We can now deliver new services much more quickly leveraging the platform investment that we have made and deliver customer value at scale faster than ever before.

So how did we actually move 300 million shoppers without them even knowing?

Divide and Conquer

As engineers, we often like nice clean solutions that don’t carry along what we like to call technical debt. Technical debt literally is stuff that we have to go back to fix/rewrite later or that requires significant ongoing maintenance effort. In a perfect world, we fire up the new platform and move all the traffic over. If you find that perfect world, please send an Uber for me. Add to this the scale of traffic we serve at Bazaarvoice, and it’s obvious it would take time to harden the new system.

The secret to how we pulled this off lies in the architecture choice to break the challenge into two parts: frontend and backend. While we reengineered the front end into the new JavaScript solution, there were still thousands of customers using the template-based front end. So, we took the original server side rendering code and turned it into a service talking to our new Polloi service. This enabled us to handle requests from client sites exactly like the original Classic system.

Also, we created a service that improved upon the original API but was compatible from a specific version forward. We chose not to try to be compatible with all versions for all time, as all APIs go through evolution and deprecation. We naturally chose the version that was compatible with the new JavaScript front end. With these choices made, we could independently decide when and how to move clients to the new backend architecture irrespective of the front-end service they were using.

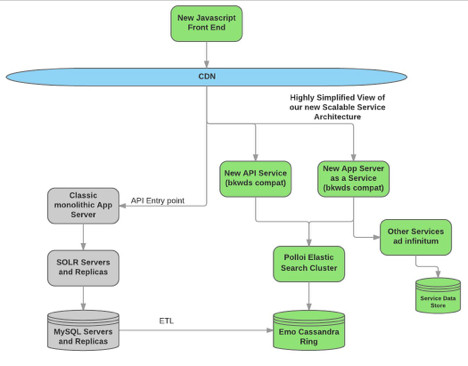

A simplified view of this architecture looks like this:

With the above in place, we can switch a JavaScript client to use the new version of the API simply by changing the endpoint of the API key. For a template-based client, we can change the endpoint to the new rendering service through a configuration in our CDN, Akamai.
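The switch described above boils down to a per-client routing decision that is independent of the front end in use. A minimal sketch of that idea follows; the `RoutingTable` class, the client ids, and the `example.com` hostnames are hypothetical and do not reflect the actual API key or Akamai configuration.

```python
from dataclasses import dataclass
from typing import Dict

CLASSIC = "classic"
NEW = "new"

# Hypothetical hostnames, used only for illustration.
BACKENDS: Dict[str, str] = {
    CLASSIC: "https://classic.example.com",
    NEW: "https://new.example.com",
}


@dataclass
class ClientRoute:
    client_id: str
    front_end: str          # "javascript" or "template"
    backend: str = CLASSIC  # every client starts on the classic platform


class RoutingTable:
    """Maps each client to a backend, regardless of which front end it uses."""

    def __init__(self) -> None:
        self._routes: Dict[str, ClientRoute] = {}

    def register(self, client_id: str, front_end: str) -> None:
        self._routes[client_id] = ClientRoute(client_id, front_end)

    def switch(self, client_id: str, backend: str) -> None:
        if backend not in BACKENDS:
            raise ValueError(f"unknown backend: {backend}")
        self._routes[client_id].backend = backend

    def endpoint_for(self, client_id: str) -> str:
        return BACKENDS[self._routes[client_id].backend]


routes = RoutingTable()
routes.register("acme", "javascript")
routes.register("globex", "template")

routes.switch("globex", NEW)   # move one client forward
routes.switch("globex", CLASSIC)  # reversing the change is the same operation
routes.switch("acme", NEW)
```

Because the front end type never appears in `switch`, moving a client forward or back is the same one-line change for both kinds of client.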

Testing for compatibility is a lot of work, though not particularly difficult. API compatibility is pretty straightforward, while testing whether a template page renders correctly is a little more involved, especially since those pages can be highly customized. Since it was a one-time event, we found the most effective way to accomplish the latter was manual inspection to be sure that the pages rendered exactly the same on our QA clusters as they did in the production Classic system.

Our early success was based on moving cohorts of customers together to the new system. At first we would move a few at a time, making absolutely sure the pages rendered correctly, monitoring system performance, and looking for any anomalies. If we saw a problem, we could move them back quickly by reversing the change in Akamai. At first much of this was also manual, so in parallel, we had to build up tooling to handle the switching of customers, which even included working with Akamai to enhance their API so we could automate changes in the CDN.

From moving a few clients at a time, we progressed to moving over 10s of clients at a time. Through a tremendous engineering effort, in parallel we improved the scalability of our ElasticSearch clusters and other systems, which allowed us to move 100s of clients at a time, then 500 clients at a time. As of this writing, we’ve moved over 5,000 sites and 100% of our display traffic is now being served from our new architecture.
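The cohort approach described above (move a small group, verify, roll back on trouble, then grow the group size) can be sketched roughly as follows. This is an illustration under assumptions, not our actual tooling: `migrate_in_cohorts`, the `switch` and `healthy` callbacks, and the cohort sizes are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence


def migrate_in_cohorts(
    clients: Sequence[str],
    cohort_sizes: Sequence[int],
    switch: Callable[[str, str], None],
    healthy: Callable[[str], bool],
) -> List[str]:
    """Move clients to the new backend cohort by cohort.

    The cohort size only grows after a clean cohort. If any client in a cohort
    looks unhealthy, the whole cohort is switched back and the rollout stops.
    Returns the clients that ended up on the new backend.
    """
    if not cohort_sizes or any(size <= 0 for size in cohort_sizes):
        raise ValueError("cohort_sizes must contain positive integers")

    migrated: List[str] = []
    remaining = list(clients)
    step = 0
    while remaining:
        # Stay at the largest size once the schedule is exhausted.
        size = cohort_sizes[min(step, len(cohort_sizes) - 1)]
        cohort, remaining = remaining[:size], remaining[size:]
        for client in cohort:
            switch(client, "new")
        if all(healthy(client) for client in cohort):
            migrated.extend(cohort)
            step += 1
        else:
            for client in cohort:
                switch(client, "classic")  # quick rollback of the whole cohort
            break  # stop so the problem can be investigated
    return migrated


# Usage with stand-in callbacks: "client-6" simulates a page that renders incorrectly.
state: Dict[str, str] = {}


def record_switch(client: str, backend: str) -> None:
    state[client] = backend


all_clients = [f"client-{i}" for i in range(10)]
moved = migrate_in_cohorts(
    all_clients,
    cohort_sizes=[1, 3, 5],
    switch=record_switch,
    healthy=lambda client: client != "client-6",
)
```

In this run the first two cohorts succeed, the third is rolled back as a unit, and the last client is never touched.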

More than just serving the same traffic as before, we have been able to move over display traffic for new services like our Curations product, which takes in and processes millions of tweets, Instagram posts, and other social media feeds. That our new architecture could handle this additional, large-scale use case without change is a testament to the innovative engineering and determination of our team over the last 2+ years. Our largest future opportunities are enabled because we’ve successfully been able to realize this architectural transformation.

{more to come in part 3}